Generative AI App LangChain Hugging Face Open Source Models Tutorial

Introduction

If you are looking to build a simple Generative AI App using open source models, then you are at the right place. When I looked online for a tutorial on building a simple Generative AI App, I mostly found videos that were long and hard to follow, or courses that were also long and time-consuming. Hence I decided to write this tutorial.

In this tutorial we will build a Generative AI App using simple Python code, LangChain, open source models from Hugging Face and a Streamlit UI. The app creates a caption for any uploaded image, then writes a short story based on that caption, and finally creates an audio file of the story that you can listen to.

Basics

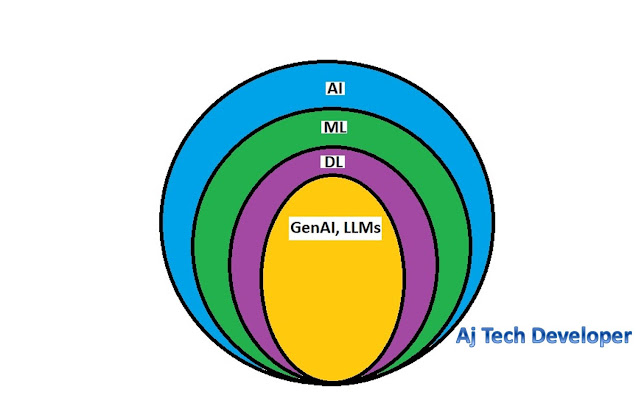

We will start with some basic concepts and then move on to building this app. We see a lot of abbreviations being used in the context of AI today, like AI, ML, DL, Generative AI, LLMs, etc.

Let us understand, at a very high level, what they mean and how they differ. The image at the top of this section gives a clearer picture of how they relate.

AI = Artificial Intelligence.

In simple terms, Artificial Intelligence is usually defined as the science of making computers do things that would require intelligence if done by humans. AI involves machines that can perform tasks that are characteristic of human intelligence.

ML = Machine Learning.

Machine Learning is a subset of AI. Machine Learning models or programs can modify themselves when exposed to more data; i.e. machine learning is dynamic and does not require a human to rewrite the program to make certain changes. Machine Learning is a way of achieving AI.
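To make "modifies itself when exposed to more data" concrete, here is a toy sketch (not how production ML libraries are implemented) of a one-parameter model y = w * x. Its parameter w starts as a guess and is nudged by gradient descent every time it sees an example pair. The function name train and the sample data are illustrative only.

```python
# Toy illustration: a model y = w * x that learns w from example data.


def train(data, lr=0.01, epochs=200):
    """Fit w in y = w * x to (x, y) pairs with plain gradient descent."""
    w = 0.0  # initial guess, before the model has seen any data
    for _ in range(epochs):
        for x, y in data:
            error = w * x - y
            # The gradient of the squared error (w*x - y)^2 with respect to w is 2 * error * x
            w -= lr * 2 * error * x
    return w


if __name__ == "__main__":
    samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]  # generated from y = 3x
    print(round(train(samples), 3))
```

Nobody wrote "multiply by 3" into the program; the value of w comes entirely from the data it was trained on.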

DL = Deep Learning.

Deep Learning is a subset of Machine Learning. Deep Learning is the study of artificial neural networks (ANNs) and related machine learning algorithms that contain more than one hidden layer. In simple terms, deep learning is one of many approaches to machine learning. Deep learning was inspired by the structure and function of the brain, namely the interconnection of many neurons. Artificial Neural Networks (ANNs) are algorithms loosely modeled on the biological structure of the brain.
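As a minimal sketch of what "more than one hidden layer" means in practice, here is a forward pass through a tiny fully connected network with two hidden layers, written in plain Python. The weights are arbitrary made-up numbers. A real deep learning model learns its weights from data and is usually built with a framework such as PyTorch rather than hand-written loops.

```python
# Forward pass: 2 inputs -> hidden layer 1 (3 neurons) -> hidden layer 2 (2 neurons) -> 1 output


def dense(inputs, weights, biases):
    """Each output neuron is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    """Non-linear activation: negative values become 0."""
    return [max(0.0, v) for v in values]


def forward(x, layers):
    """Apply each (weights, biases) layer in turn; ReLU after every layer except the last."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return x


# Illustrative, hand-picked weights (a trained network would learn these)
LAYERS = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, 0.0]),  # hidden layer 1
    ([[0.2, 0.5, 0.3], [0.7, 0.1, 0.4]], [0.0, 0.0]),           # hidden layer 2
    ([[1.0, -1.0]], [0.05]),                                     # output layer
]

if __name__ == "__main__":
    print(forward([1.0, 2.0], LAYERS))
```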

GenAI, LLMs = Generative Artificial Intelligence, Large Language Models.

Generative AI and LLMs are a subset of Deep Learning. Generative AI refers to deep learning models that can generate high-quality text, images, videos and other content based on the data they were trained on. For text, these models are usually Large Language Models (LLMs), which have been trained on huge amounts of data.
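To illustrate the idea of generating new content from patterns in training data, here is a deliberately tiny toy: a word-bigram model that records which word follows which in a training text, then samples a new word sequence from those statistics. This is not how LLMs work internally (they are deep neural networks that predict the next token), and the function names are hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    table = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        table[current].append(nxt)
    return table


def generate(table, start, length, seed=0):
    """Sample up to `length` words, each chosen from the observed followers of the previous word."""
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    output = [start]
    for _ in range(length - 1):
        followers = table.get(output[-1])
        if not followers:  # dead end: no word ever followed this one
            break
        output.append(rng.choice(followers))
    return " ".join(output)


if __name__ == "__main__":
    table = train_bigrams("the cat sat on the mat and the cat ran")
    print(generate(table, "the", 6))
```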

Hugging Face

If you want to build Generative AI Apps, you should definitely know about Hugging Face. Hugging Face is a platform, community and repository of open source models, datasets and applications that helps users build, train and test machine learning models. They are on a mission to democratize Artificial Intelligence.

To know more about Hugging Face, please check the Hugging Face website.

LangChain

LangChain is a framework that simplifies the process of developing applications powered by large language models (LLMs).

To know more about LangChain, please check the LangChain website.

Requirements to Run the Application:

1. IntelliJ IDEA with the Python Community Edition plugin, PyCharm Community Edition, or any other IDE for working on Python code.

Using the simple steps below you can create your own GenAI App.

Step 1: Sign Up on Hugging Face Website and create API Token

Sign up on the Hugging Face website, then go to Profile -> Settings -> Access Tokens, click the New token button and create a new token for your GenAI App.
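The app reads this token from the HUGGINGFACEHUB_API_TOKEN environment variable, the name used in the .env file and in the Streamlit Cloud code later in this post. A small helper like the one below (get_hf_token is a hypothetical name, not part of any library) can fail fast with a clear message when the token is missing.

```python
import os


def get_hf_token(var_name="HUGGINGFACEHUB_API_TOKEN"):
    """Return the Hugging Face token from the environment or raise a clear error."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        # Failing here is easier to debug than an authentication error from a model call
        raise RuntimeError(
            f"{var_name} is not set. Create a token under Profile -> Settings -> Access Tokens "
            "and add it to your .env file or environment."
        )
    return token
```

You could call get_hf_token() right after load_dotenv(find_dotenv()) in app.py so a missing token is reported as soon as the app starts.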

Step 2: Image to Text: Generate a caption for an uploaded image

On the Hugging Face website, click Tasks and then click Image-to-Text.

You can use any of the models listed on that page. I am using the model Salesforce/blip-image-captioning-base.
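Whichever model you choose, the transformers image-to-text pipeline returns its result as a list of dictionaries with a generated_text key, which is why the function in this step indexes [0]["generated_text"]. A hypothetical helper that makes this parsing explicit, and reports a clear error if the output does not have the expected shape, might look like this. It needs no model to run.

```python
from typing import Any, Dict, List


def first_caption(outputs: List[Dict[str, Any]]) -> str:
    """Extract the first caption from image-to-text pipeline output."""
    # Expected shape, e.g. [{"generated_text": "a dog sitting on the grass"}]
    if not outputs or "generated_text" not in outputs[0]:
        raise ValueError(f"unexpected pipeline output: {outputs!r}")
    return outputs[0]["generated_text"].strip()
```

Inside image_to_text you would then write first_caption(caption_creator(url)) instead of indexing the result directly.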

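You can use the code snippet shown under the Inference heading on that page. Here is the related Python function:

```python
from transformers import pipeline


def image_to_text(url):
    # Load the BLIP image-captioning model through the transformers pipeline API.
    # Note: the model is loaded again on every call.
    caption_creator = pipeline("image-to-text", model="Salesforce/blip-image-captioning-base")

    # The pipeline accepts a local file path, a URL or a PIL image and returns
    # a list of dicts such as [{"generated_text": "..."}]
    text = caption_creator(url)[0]["generated_text"]
    print(text)
    return text
```

Step 3: Caption to Story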

In this step, LangChain is used to create a prompt template. The filled-in prompt is passed to the LLM, which processes it and generates the desired output.

I am using the following prompt to create a short story from the caption generated in the previous step:

You are a story teller;

You can generate short stories based on a simple narrative

Your story should be no more than 60 words.
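In the function for this step, the caption is substituted into the prompt through a {scenario} placeholder, and the model's reply is cut down to the part after "STORY:". The sketch below reproduces just those two string operations in plain Python so you can try them without calling a model. build_prompt and extract_story are hypothetical helper names, and str.format stands in for LangChain's PromptTemplate, whose default template format uses the same {name} syntax.

```python
# Plain-Python sketch (no LangChain): fill the template, then keep the text after "STORY:".

TEMPLATE = """
You are a story teller;
You can generate short stories based on a simple narrative
Your story should be no more than 60 words.

CONTEXT: {scenario}
STORY:
"""


def build_prompt(scenario: str) -> str:
    """Substitute the image caption into the {scenario} placeholder."""
    return TEMPLATE.format(scenario=scenario)


def extract_story(model_output: str, marker: str = "STORY:") -> str:
    """Return the text after the marker, or the whole output if the marker is absent."""
    # split(..., 1)[-1] avoids an IndexError when the model does not echo the marker
    return model_output.split(marker, 1)[-1].strip()


if __name__ == "__main__":
    prompt = build_prompt("a dog running on a beach")
    print(prompt)
    print(extract_story(prompt + " The dog chased the waves."))
```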

To choose a model, on the Hugging Face website go to Tasks -> Natural Language Processing and click Text Generation. I am using HuggingFaceH4/zephyr-7b-beta.

Here is the Python function that uses LangChain and this LLM to generate a short story from the caption passed to it:

```python
from langchain.chains.llm import LLMChain
from langchain_community.llms.huggingface_hub import HuggingFaceHub
from langchain_core.prompts import PromptTemplate


def story_generator(scenario):
    # Prompt template; {scenario} is replaced by the image caption
    template = """
You are a story teller;
You can generate short stories based on a simple narrative
Your story should be no more than 60 words.

CONTEXT: {scenario}
STORY:
"""

    # Open source LLM called through the Hugging Face Hub inference API
    repo_id = "HuggingFaceH4/zephyr-7b-beta"
    llm = HuggingFaceHub(repo_id=repo_id, model_kwargs={"temperature": 1, "max_length": 64})

    prompt = PromptTemplate(template=template, input_variables=["scenario"])
    story_llm = LLMChain(prompt=prompt, llm=llm)
    story = story_llm.predict(scenario=scenario)

    # Keep only the text after "STORY:". If the marker is missing from the
    # response, fall back to the whole response instead of raising an IndexError.
    spl_word = 'STORY:'
    actual_story = story.split(spl_word, 1)[-1].strip()
    print(actual_story)
    return actual_story
```

Step 4: Story to Audio

To convert the generated story to an audio file, I am using plain Python code and the gTTS (Google Text-to-Speech) Python library.

Here is the code for the Python function:

```python
from gtts import gTTS


def text_to_audio(story):
    # Language to be used
    language = 'en'

    # Create the audio (slow=False reads the text at normal speed)
    audio = gTTS(text=story, lang=language, slow=False)

    # Save the converted audio as an MP3 file
    audio.save("audio.mp3")
```
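The prompt asks for no more than 60 words, but the model is not guaranteed to respect that, and a longer story means a longer gTTS request and a longer audio file. One option, sketched below as a hypothetical helper, is to trim the story before passing it to text_to_audio.

```python
def limit_words(text, max_words=60):
    """Return at most max_words words of text, joined by single spaces."""
    words = text.split()  # also collapses newlines and repeated spaces
    return " ".join(words[:max_words])
```

In main() this would become text_to_audio(limit_words(story)). You may still prefer to display the full story in the UI and trim only what is read aloud.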

Step 5: UI

We will bring all of the above steps together in a simple UI built with Streamlit. Streamlit lets you create web apps, including Generative AI apps, quickly with a few lines of Python code. The app can then be hosted for free on Streamlit Cloud and accessed over the internet. Here is the main Python function using Streamlit:

```python
import streamlit as st


def main():
    st.set_page_config(page_title="Picture to Audio Story", page_icon=":)")
    st.header("AI In Action: Transform A Picture To An Audio Story")
    st.markdown("This App uses AI to generate a caption for any uploaded picture and a short audio story using the caption.")

    uploaded_file = st.file_uploader("Choose an image", type="jpg")

    if uploaded_file is not None:
        print(uploaded_file)
        # Save the upload locally so the captioning pipeline can read it from disk
        bytes_data = uploaded_file.getvalue()
        with open(uploaded_file.name, "wb") as file:
            file.write(bytes_data)
        st.image(uploaded_file, caption="Uploaded Image", use_column_width=True)

        # Image -> caption -> story -> audio, using the functions from the previous steps
        scenario = image_to_text(uploaded_file.name)
        story = story_generator(scenario)
        text_to_audio(story)

        with st.expander("Caption"):
            st.write(scenario)
        with st.expander("Story"):
            st.write(story)

        st.audio("audio.mp3")
```
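main() writes the upload under its original file name, and every run writes to the same audio.mp3. That is fine for a local demo, but on a shared deployment two users could overwrite each other's files, and the user-supplied name is used directly as a path. A minimal, hypothetical mitigation is to derive both file names from a hash of the uploaded bytes (identical images still map to the same names).

```python
import hashlib
from pathlib import Path
from typing import Tuple


def paths_for_upload(data: bytes, workdir: str = ".") -> Tuple[Path, Path]:
    """Return (image_path, audio_path) derived from the uploaded image bytes."""
    # A short SHA-256 prefix gives each distinct image its own file names,
    # so different uploads no longer share a single audio.mp3
    digest = hashlib.sha256(data).hexdigest()[:16]
    base = Path(workdir)
    return base / f"upload_{digest}.jpg", base / f"story_{digest}.mp3"
```

In main() you would write bytes_data to the image path and pass str(image_path) to image_to_text. Using the audio path as well would require adding a file-name parameter to text_to_audio, which the original function does not have.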

Here is the full code in the file app.py:

```python
import streamlit as st
from dotenv import find_dotenv, load_dotenv
import os
from gtts import gTTS
from langchain.chains.llm import LLMChain
from langchain_community.llms.huggingface_hub import HuggingFaceHub
from langchain_core.prompts import PromptTemplate
from transformers import pipeline

# To be used when running locally
# Also .env file to be created at root folder level
# with token: HUGGINGFACEHUB_API_TOKEN = <Your HuggingFace Hub API Token>
load_dotenv(find_dotenv())

# To be used when deploying to Streamlit Cloud
# hf_token = st.secrets["HUGGINGFACE_TOKEN"]["token"]
# os.environ["HUGGINGFACEHUB_API_TOKEN"] = hf_token


# Text to Audio
def text_to_audio(story):
    # Language to be used
    language = 'en'
    # Create the audio
    audio = gTTS(text=story, lang=language, slow=False)
    # Save the converted audio as an MP3 file
    audio.save("audio.mp3")


# Generate Story using LangChain
def story_generator(scenario):
    template = """
You are a story teller;
You can generate short stories based on a simple narrative
Your story should be no more than 60 words.

CONTEXT: {scenario}
STORY:
"""
    repo_id = "HuggingFaceH4/zephyr-7b-beta"
    llm = HuggingFaceHub(repo_id=repo_id, model_kwargs={"temperature": 1, "max_length": 64})
    prompt = PromptTemplate(template=template, input_variables=["scenario"])
    story_llm = LLMChain(prompt=prompt, llm=llm)
    story = story_llm.predict(scenario=scenario)

    # Keep only the text after "STORY:"; fall back to the whole response if the marker is missing
    spl_word = 'STORY:'
    actual_story = story.split(spl_word, 1)[-1].strip()
    print(actual_story)
    return actual_story


# Image to text
def image_to_text(url):
    caption_creator = pipeline("image-to-text", model="Salesforce/blip-image-captioning-base")
    text = caption_creator(url)[0]["generated_text"]
    print(text)
    return text


def main():
    st.set_page_config(page_title="Picture to Audio Story", page_icon=":)")
    st.header("AI In Action: Transform A Picture To An Audio Story")
    st.markdown("This App uses AI to generate a caption for any uploaded picture and a short audio story using the caption.")

    uploaded_file = st.file_uploader("Choose an image", type="jpg")

    if uploaded_file is not None:
        print(uploaded_file)
        bytes_data = uploaded_file.getvalue()
        with open(uploaded_file.name, "wb") as file:
            file.write(bytes_data)
        st.image(uploaded_file, caption="Uploaded Image", use_column_width=True)

        scenario = image_to_text(uploaded_file.name)
        story = story_generator(scenario)
        text_to_audio(story)

        with st.expander("Caption"):
            st.write(scenario)
        with st.expander("Story"):
            st.write(story)

        st.audio("audio.mp3")


if __name__ == '__main__':
    main()
```

Run Application:

1. Create a .env file at the same level as app.py and paste the Hugging Face token that you created in Step 1 in the format below:

```
HUGGINGFACEHUB_API_TOKEN = <Your HuggingFace Hub API Token>
```

Replace <Your HuggingFace Hub API Token> with the token that you created in Step 1.

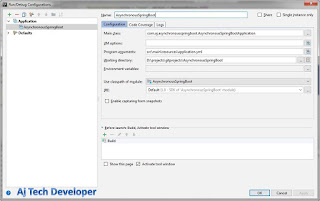

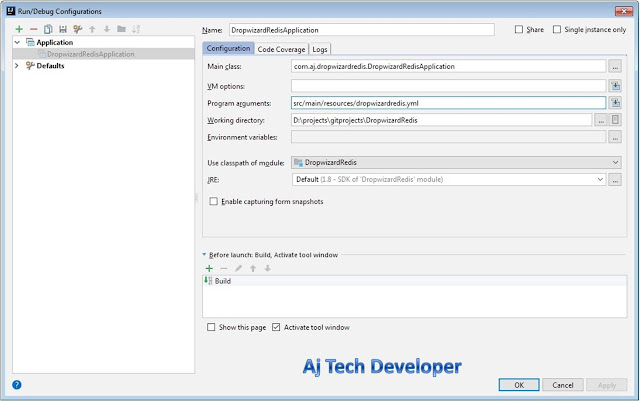

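2. To run the application in PyCharm, create a new Python run configuration named streamlit with:

Module Name: streamlit

Parameters: run followed by the path to your Python code file (for example, app.py)

This is equivalent to running python -m streamlit run app.py from a terminal in the project folder.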

GenAI App

The GenAI App opens in a new browser window at the local URL http://localhost:8501/

Go ahead and upload any image in JPEG format and wait for the app to run. It creates a caption for the uploaded image, writes a short story from the caption and generates an audio file of the story.

Here is the image that I uploaded and the story that was created:

Step 6: Host the App

Sign up at the Streamlit Website

Link your GitHub account

Create a requirements.txt file for your app. For this app I have created this file with the following contents:

```
streamlit
python-dotenv
gtts
langchain
transformers
torch
tf-keras==2.16.0
```

The code also imports from langchain_community. Recent LangChain releases no longer install langchain-community automatically, so you may need to add it to this file as well.

In app.py, comment out the code that uses the .env file and uncomment the code that uses secrets in Streamlit Cloud. The code will be as below:

```python
# To be used when running locally
# Also .env file to be created at root folder level
# with token: HUGGINGFACEHUB_API_TOKEN = <Your HuggingFace Hub API Token>
# load_dotenv(find_dotenv())

# To be used when deploying to Streamlit Cloud
hf_token = st.secrets["HUGGINGFACE_TOKEN"]["token"]
os.environ["HUGGINGFACEHUB_API_TOKEN"] = hf_token
```

Create a secret in Streamlit Cloud so that your hosted app can use the Hugging Face token created in Step 1.

In Streamlit Cloud, open your app's Settings -> Secrets.

Enter the secret in the following format, replacing hf_ with your full Hugging Face token:

```toml
[HUGGINGFACE_TOKEN]
token="hf_"
```

You can access my Generative AI App using the URL below:

https://aipicturetoaudiostory.streamlit.app/
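If you would rather not comment and uncomment code when switching between local runs and Streamlit Cloud, one option is to look for the Streamlit secret first and fall back to the environment variable loaded from .env. The sketch below takes plain mappings so it can be tried without Streamlit; resolve_hf_token is a hypothetical name, and it assumes st.secrets behaves like a read-only mapping with nested sections.

```python
from typing import Any, Mapping, Optional


def resolve_hf_token(secrets: Optional[Mapping[str, Any]], env: Mapping[str, str]) -> Optional[str]:
    """Prefer the Streamlit secret, otherwise use HUGGINGFACEHUB_API_TOKEN from the environment."""
    if secrets:
        # Matches the secrets format: [HUGGINGFACE_TOKEN] token="hf_..."
        section = secrets.get("HUGGINGFACE_TOKEN")
        if section and section.get("token"):
            return section["token"]
    return env.get("HUGGINGFACEHUB_API_TOKEN")
```

In app.py you might call resolve_hf_token(st.secrets, os.environ) after load_dotenv(find_dotenv()) and store the result in os.environ["HUGGINGFACEHUB_API_TOKEN"]. Wrap that call in try/except and fall back to resolve_hf_token(None, os.environ), because reading st.secrets when no secrets file exists can raise an error in some Streamlit versions.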

Conclusion and GitHub link:

This tutorial walked through building a simple Generative AI App using simple Python code, LangChain, open source models from Hugging Face and a Streamlit UI. The code for the application used in this post is available on GitHub.

Learn the most popular and trending technologies like Blockchain, Cryptocurrency, Machine Learning, Chatbots, Internet of Things (IoT), Big Data Processing, Elastic Stack, React, Highcharts, Progressive Web Application (PWA), Angular, gRPC, GraphQL, Golang, Akka HTTP, Play Framework, Dropwizard, Docker, Netflix Eureka, Netflix Zuul, Spring Cloud, Spring Boot, Flask and RESTful Web Service integration with MongoDB, Kafka, Redis, Aerospike, MySQL DB in simple steps by reading my most popular blog posts at Software Developer Central.

If you like my post, please feel free to share it using the share button just below this paragraph or next to the heading of the post. You can also tweet with #SoftwareDeveloperCentral on X. To get a notification on my latest posts or to keep the conversation going, you can follow me on X or Instagram. Please leave a note below if you have any questions or comments.
