Machine Learning and NLP Chatbot

Machine Learning and NLP Chatbot

Introduction

Chatbots are able to translate and interpret human natural language input through a combination of NLP (Natural Language Processing) and Machine Learning. In this post I will show you how this can be done. This post is the second part of the tutorial on chatbots. To learn a few basic concepts and how to build a simple chatbot using NLTK, please refer to my first part tutorial: NLTK Chatbot Tutorial.

I have named the chatbot as SmartBot. The SmartBot presented in this post, works in 3 basic modes:

I have named the chatbot as SmartBot. The SmartBot presented in this post, works in 3 basic modes:

- Chat Mode(return learned responses from previous exchanges)

- Statement Mode(accept a statement or fact and store it in the database)

- Question Mode (accept a question and try to answer it based on previously stored statements)

Requirements to Run the Application

- Anaconda.

- Java.

- MySQL Database

- Intellij IDE with Python Community Edition Plugin.

MySQL Database should be setup and running in your machine. To setup, run and test if the MySQL Database is working fine, please refer to my post on: MySQL Database Setup.

Step 1: Setup Chatbot Environment

Libraries related to MySQL DB, NLTK and Machine Learning need to be installed. In the Anaconda Prompt, execute the following commands one by one:

conda install pymysql conda install nltk conda install numpy conda install scipy conda install pandas conda install scikit-learnTo download NLTK Data, execute the following commands in Anaconda Prompt. Here is a sample of the command execution and results in Anaconda Prompt:

$ python

>>> import nltk

>>> nltk.download('punkt')

[nltk_data] Downloading package punkt to /home/botuser/nltk_data...

[nltk_data] Unzipping tokenizers/punkt.zip.

True

>>> nltk.download('averaged_perceptron_tagger')

[nltk_data] Downloading package averaged_perceptron_tagger to

[nltk_data] /home/botuser/nltk_data...

[nltk_data] Unzipping taggers/averaged_perceptron_tagger.zip.

True

>>> nltk.download("stopwords")

[nltk_data] Downloading package stopwords to

[nltk_data] /home/botuser/nltk_data...

[nltk_data] Unzipping corpora/stopwords.zip.

Download Stanford NLP JARs from: Stanford NLP Website. I have downloaded the version 3.7.0 and the download file name is: stanford-corenlp-full-2016-10-31.zipCreate a file named as config.ini and this file will have the details of all the configurations that will be used by our chatbot. Edit this file as per the DB User/ DB Name/ Java Location/ JAR Location that are present in your machine. Here is config.ini:

[MySQL] server: localhost dbuser: root dbname: softwaredevelopercentral dbcharset: utf8mb4 [Server] listen_host: 0.0.0.0 tcp_socket: 3333 listen_queue: 10 [Java] #required for Stanford CoreNLP bin: D:\Programs\Java\jdk1.8.0_161\bin [StanfordNLP] corejar: D:\projects\other\NLPBot\lib\stanford-corenlp-3.7.0.jar modelsjar: D:\projects\other\NLPBot\lib\stanford-corenlp-3.7.0-models.jar [DEBUG] assoc: False weight: False itemid: False match: False server: False answer: FalseIf you observe, in this file, I have setup the following:

- MySQL DB Details

- Bot Server Host and Port

- Java JDK location to be used by Stanford CoreNLP

- Stanford NLP JAR locations

Creation of Training Data

I have created a CSV file with sentences that have been classified into S - Sentence, Q - Question, C - Clause

This CSV is named as classifySentences.csv and can be found in the data folder in the project structure.

I am then using the code in extractfeaturesdump.py to read a CSV of sentences that have been classified (classifySentences.csv) and dump out the features using extractfeatures.py into a Dump CSV (featuresDump.csv).

featuresDump.csv can be found in the dumps folder in the project structure.

Re-Build the SciKit-Learn ML Model

I have created a CSV file with sentences that have been classified into S - Sentence, Q - Question, C - Clause

This CSV is named as classifySentences.csv and can be found in the data folder in the project structure.

I am then using the code in extractfeaturesdump.py to read a CSV of sentences that have been classified (classifySentences.csv) and dump out the features using extractfeatures.py into a Dump CSV (featuresDump.csv).

featuresDump.csv can be found in the dumps folder in the project structure.

Re-Build the SciKit-Learn ML Model

Due to few binary compatibility issues, usually the ML model must be re-built. Execute the following command:

python generaterandomforestmodel.py

(or) In Intellij, right click and run the file generaterandomforestmodel.py

This reads in training data from ./dumps/featuresDump.csv and writes it out to ./RFmodel.ml

Step 2: Test DB Connectivity and Setup DB Tables

To test database connectivity and setup DB Tables required by our SmartBot, use the following Python Commands:

python pingdatabase.py python setupdatabase.py

(or) If you prefer Intellij:

To Test DB Connectivity, right click and run the file pingdatabase.py

To Setup DB Tables required by our SmartBot, right click and run the file setupdatabase.py.

Also note that at any time if you wish to clean up the database and start afresh, you can execute the file setupdatabase.py.

Also note that at any time if you wish to clean up the database and start afresh, you can execute the file setupdatabase.py.

Step 3: Create files required by the Chatbot

1. Main Chatbot logic is present in chatlogic.py. Here is chatlogic.py:

import hashlib

import os

import pickle

import random

import re

import string

from collections import Counter

from math import sqrt

from string import punctuation

from nltk.parse.stanford import StanfordDependencyParser

weight = 0

import helpers # General utils including config params and database connection

import extractfeatures # module for extracting features from sentence to use with ML models

conf = helpers.get_config()

ACCURACY_THRESHOLD = 0.03

NO_CHAT_DATA = "Sorry, I do not know what to say."

NO_ANSWER_DATA = "Sorry, I cannot find an answer to that."

STATEMENT_STORED = ["Thanks, I've made a note of that.",

"Thanks for telling me that.",

"OK, I've stored that information.",

"OK, I've made a note of that."]

toBool = lambda str: True if str == "True" else False

## Get Config from Config.ini File ##

DEBUG_ASSOC = toBool(conf["DEBUG"]["assoc"])

DEBUG_WEIGHT = toBool(conf["DEBUG"]["weight"])

DEBUG_ITEMID = toBool(conf["DEBUG"]["itemid"])

DEBUG_MATCH = toBool(conf["DEBUG"]["match"])

DEBUG_ANSWER = toBool(conf["DEBUG"]["answer"])

JAVA_HOME = conf["Java"]["bin"]

STANFORD_NLP = conf["StanfordNLP"]["corejar"]

STANFORD_MODELS = conf["StanfordNLP"]["modelsjar"]

RF_MODEL_LOCATION = './RFmodel.ml'

os.environ['JAVAHOME'] = JAVA_HOME # Set this to where the JDK is

## End of Config ##

#Strip non-alpha chars out - basic protection for SQL strings built out of concat ops

##clean = lambda str: ''.join(ch for ch in str if ch.isalnum())

def hashtext(stringText):

"""Return a string with first 16 numeric chars from hashing a given string

"""

#hashlib md5 returns same hash for given string each time

return hashlib.md5(str(stringText).encode('utf-8')).hexdigest()[:16]

def get_or_add_item(entityName, text, cursor):

"""Retrieve an entity's unique ID from the database, given its associated text.

If the row is not already present, it is inserted.

The entity can either be a sentence or a word."""

#entityName = clean(entityName)

#text = clean(text)

tableName = entityName + 's'

columnName = entityName

alreadyExists = False

#check whether 16-char hash of this text exists already

hashid = hashtext(text)

SQL = 'SELECT hashid FROM ' + tableName + ' WHERE hashID = %s'

if (DEBUG_ITEMID == True): print("DEBUG ITEMID: " + SQL)

cursor.execute(SQL, (hashid))

row = cursor.fetchone()

if row:

if (DEBUG_ITEMID == True): print("DEBUG ITEMID: item found, just return hashid:",row["hashid"], " for ", text )

alreadyExists = True

return row["hashid"], alreadyExists

else:

if (DEBUG_ITEMID == True): print("DEBUG ITEMID: no item found, insert new hashid into",tableName, " hashid:", hashid, " text:",text )

SQL = 'INSERT INTO ' + tableName + ' (hashid, ' + columnName + ') VALUES (%s, %s)'

alreadyExists = False

cursor.execute(SQL, (hashid, text))

return hashid, alreadyExists

def get_words(text):

"""Retrieve the words present in a given string of text.

The return value is a list of tuples where the first member is a lowercase word,

and the second member the number of time it is present in the text. Example:

IN: "Did the cow jump over the moon?"

OUT: dict_items([('cow', 1), ('jump', 1), ('moon', 1), ('?', 1), ('over', 1), ('the', 2), ('did', 1)])

"""

puncRegexp = re.compile('[%s]' % re.escape(string.punctuation))

text = puncRegexp.sub('',text )

wordsRegexpString = '\w+'

wordsRegexp = re.compile(wordsRegexpString)

wordsList = wordsRegexp.findall(text.lower())

return Counter(wordsList).items()

def set_association(words, sentence_id, cursor):

""" Pass in "words" which is a list of tuples - each tuple is word,count

("a_word" and count of occurences - i.e. ("the", 3) means the occurred 3 times in sentence)

Nothing is returned by this function - it just updates the associations table in the database

If current association for a word_id is 0, a new word-sentence association is added

If current association for a word_id is > 0, the word-sentence association is updated with a new weight

which is just the existing association weight (passed back by get_association) and the new weight

"""

words_length = sum([n * len(word) for word, n in words]) # int giving number of chars in words

# Looping through Bot-Words, associating them with Human Sentence

for word, n in words:

word_id, exists = get_or_add_item('word', word, cursor) # if the ID doesn't exist, a new word + hash ID is inserted

weight = sqrt(n / float(words_length)) # repeated words get higher weight. Longer sentences reduces their weight

#Association shows that a Bot-Word is associated with a Human-Sentence

# Bot learns by associating our responses with its words

association = get_association(word_id,sentence_id, cursor)

if association > 0:

if (DEBUG_ASSOC == True): print("DEBUG_ASSOC: got an association for", word, " value: ", association, " with sentence_id:", sentence_id)

SQL = 'UPDATE associations SET weight = %s WHERE word_id = %s AND sentence_id = %s'

if (DEBUG_ASSOC == True): print("DEBUG_ASSOC:", SQL, weight, word_id, sentence_id)

cursor.execute(SQL, (association+weight, word_id, sentence_id))

else:

SQL = 'INSERT INTO associations (word_id, sentence_id, weight) VALUES (%s, %s, %s)'

if (DEBUG_ASSOC == True): print("DEBUG_ASSOC:", SQL,word_id, sentence_id, weight)

cursor.execute(SQL, (word_id, sentence_id, weight))

def get_association(word_id,sentence_id, cursor):

"""Get the weighting associating a Word with a Sentence-Response

If no association found, return 0

This is called in the set_association routine to check if there is already an association

associations are referred to in the get_matches() fn, to match input sentences to response sentences

"""

SQL = 'SELECT weight FROM associations WHERE word_id =%s AND sentence_id =%s'

if (DEBUG_ASSOC == True): print("DEBUG_ASSOC:", SQL,word_id, sentence_id)

cursor.execute(SQL, (word_id,sentence_id))

row = cursor.fetchone()

if row:

weight = row["weight"]

else:

weight = 0

return weight

def retrieve_matches(words, cursor):

""" Retrieve the most likely sentence-response from the database

pass in humanWords, calculate a weighting factor for different sentences based on data in

associations table.

passback ordered list of results (maybe only need to return single row?)

"""

results = []

listSize = 10

cursor.execute('DELETE FROM results WHERE connection_id = connection_id()')

# calc "words_length" for weighting calc

words_length = sum([n * len(word) for word, n in words])

if (DEBUG_MATCH == True): print("DEBUG_MATCH: words list", words, " words_length:", words_length )

for word, n in words:

#weight = sqrt(n / float(words_length)) # repeated words get higher weight. Longer sentences reduces their weight

weight = (n / float(words_length))

SQL = 'INSERT INTO results \

SELECT connection_id(), associations.sentence_id, sentences.sentence, %s * associations.weight/(1+sentences.used) \

FROM words \

INNER JOIN associations ON associations.word_id=words.hashid \

INNER JOIN sentences ON sentences.hashid=associations.sentence_id \

WHERE words.word = %s'

if (DEBUG_MATCH == True): print("DEBUG_MATCH: ", SQL, " weight = ",weight , "word = ", word)

cursor.execute(SQL, (weight, word))

if (DEBUG_MATCH == True): print("DEBUG_MATCH: ", SQL)

cursor.execute('SELECT sentence_id, sentence, SUM(weight) AS sum_weight \

FROM results \

WHERE connection_id = connection_id() \

GROUP BY sentence_id, sentence \

ORDER BY sum_weight DESC')

# Fetch an ordered "listSize" number of results

for i in range(0,listSize):

row = cursor.fetchone()

if row:

results.append([row["sentence_id"], row["sentence"], row["sum_weight"]])

if (DEBUG_MATCH == True): print("**",[row["sentence_id"], row["sentence"], row["sum_weight"]],"\n")

else:

break

cursor.execute('DELETE FROM results WHERE connection_id = connection_id()')

return results

def feedback_stats(sentence_id, cursor, previous_sentence_id = None, sentiment = True):

"""

Feedback usage of sentence stats, tune model based on user response.

"""

SQL = 'UPDATE sentences SET used=used+1 WHERE hashid=%s'

cursor.execute(SQL, (sentence_id))

def train_me(inputSentence, responseSentence, cursor):

inputWords = get_words(inputSentence) #list of tuples of words + occurrence count

responseSentenceID, exists = get_or_add_item('sentence', responseSentence, cursor)

set_association(inputWords, responseSentenceID, cursor)

def sentence_rf_class(sentence):

"""

Pass in a sentence, with unique ID and pass back a classification code

Use a pre-built Random Forest model to determine classification based on

features extracted from the sentence.

"""

# Load a pre-built Random Forest Model

with open(RF_MODEL_LOCATION, 'rb') as f:

rf = pickle.load(f)

id = hashtext(sentence) #features needs an ID passing in at moment - maybe redundant?

fseries = extractfeatures.features_series(extractfeatures.features_dict(id, sentence))

width = len(fseries)

fseries = fseries[1:width-1] #All but the first and last item (strip ID and null class off)

#Get a classification prediction from the Model, based on supplied features

sentence_class = rf.predict([fseries])[0].strip()

return sentence_class

def get_grammar(sentence):

"""

Use Stanford CoreNLP to extract grammar from Stanford NLP Java utility

Return

root topic (lower-case string - "Core"),

subj (list with main subj first, compounds after)

obj (list with main obj first, compounds after)

"""

os.environ['JAVAHOME'] = JAVA_HOME # Set this to where the JDK is

dependency_parser = StanfordDependencyParser(path_to_jar=STANFORD_NLP, path_to_models_jar=STANFORD_MODELS)

regexpSubj = re.compile(r'subj')

regexpObj = re.compile(r'obj')

regexpMod = re.compile(r'mod')

regexpNouns = re.compile("^N.*|^PR.*")

sentence = sentence.lower()

#return grammar Compound Modifiers for given word

def get_compounds(triples, word):

compounds = []

for t in triples:

if t[0][0] == word:

if t[2][1] not in ["CC", "DT", "EX", "LS", "RP", "SYM", "TO", "UH", "PRP"]:

compounds.append(t[2][0])

mods = []

for c in compounds:

mods.append(get_modifier(triples, c))

compounds.append(mods)

return compounds

def get_modifier(triples, word):

modifier = []

for t in triples:

if t[0][0] == word:

if regexpMod.search(t[1]):

modifier.append(t[2][0])

return modifier

#Get grammar Triples from Stanford Parser

result = dependency_parser.raw_parse(sentence)

dep = next(result) # get next item from the iterator result

#Get word-root or "topic"

root = [dep.root["word"]]

root.append(get_compounds(dep.triples(), root[0]))

root.append(get_modifier(dep.triples(), root[0]))

subj = []

obj = []

lastNounA = ""

lastNounB = ""

for t in dep.triples():

if regexpSubj.search(t[1]):

subj.append(t[2][0] )

subj.append(get_compounds(dep.triples(),t[2][0]))

if regexpObj.search(t[1]):

obj.append(t[2][0])

obj.append(get_compounds(dep.triples(),t[2][0]))

if regexpNouns.search(t[0][1]):

lastNounA = t[0][0]

if regexpNouns.search(t[2][1]):

lastNounB = t[2][0]

return list(helpers.flatten([root])), list(helpers.flatten([subj])), list(helpers.flatten([obj])), list(helpers.flatten([lastNounA])), list(helpers.flatten([lastNounB]))

def store_statement(sentence, cursor):

#Write the sentence to SENTENCES with hashid = id, used = 1 OR update used if already there

sentence_id, exists = get_or_add_item('sentence', sentence, cursor)

SQL = 'UPDATE sentences SET used=used+1 WHERE hashid=%s'

cursor.execute(SQL, (sentence_id))

#If the sentence already exists, assume the statement grammar is already there

if not exists:

topic, subj,obj,lastNounA, lastnounB = get_grammar(sentence)

lastNouns = lastNounA + lastnounB

#topic

for word in topic:

word_id, exists = get_or_add_item('word', word, cursor)

SQL = "INSERT INTO statements (sentence_id, word_id, class) VALUES (%s, %s, %s) "

cursor.execute(SQL, (sentence_id, word_id, 'topic'))

#subj

for word in subj:

word_id, exists = get_or_add_item('word', word, cursor)

SQL = "INSERT INTO statements (sentence_id, word_id, class) VALUES (%s, %s, %s) "

cursor.execute(SQL, (sentence_id, word_id, 'subj'))

#obj

for word in obj:

word_id, exists = get_or_add_item('word', word, cursor)

SQL = "INSERT INTO statements (sentence_id, word_id, class) VALUES (%s, %s, %s) "

cursor.execute(SQL, (sentence_id, word_id, 'obj'))

#lastNouns

for word in lastNouns:

word_id, exists = get_or_add_item('word', word, cursor)

SQL = "INSERT INTO statements (sentence_id, word_id, class) VALUES (%s, %s, %s) "

cursor.execute(SQL, (sentence_id, word_id, 'nouns'))

def get_answer(sentence, cursor):

""" Retrieve the most likely question-answer response from the database

pass in humanWords "sentence", extract a grammar for it, query from statements

table based on subject and other grammar components,

passback ordered list of results , up to "listSize" in size

"""

results = []

listSize = 10

topic,subj,obj,lastNounA,lastNounB = get_grammar(sentence)

subj_topic = subj + topic

subj_obj = subj + obj

full_grammar = topic + subj + obj + lastNounA + lastNounB

full_grammar_in = ' ,'.join(list(map(lambda x: '%s', full_grammar))) # SQL in-list fmt

subj_in = ' ,'.join(list(map(lambda x: '%s', subj_topic))) # SQL in-list fmt

if (DEBUG_ANSWER == True): print("DEBUG_ANSWER: grammar: SUBJ", subj, " TOPIC", topic, " OBJ:", obj, " L-NOUNS:", lastNounA + lastNounB)

if (DEBUG_ANSWER == True): print("DEBUG_ANSWER: subj_in", subj_in, "\nsubj_topic", subj_topic, "\nfull_grammar_in", full_grammar_in, "\nfull_grammer", full_grammar)

SQL1 = """SELECT count(*) score, statements.sentence_id sentence_id, sentences.sentence

FROM statements

INNER JOIN words ON statements.word_id = words.hashid

INNER JOIN sentences ON sentences.hashid = statements.sentence_id

WHERE words.word IN (%s) """

SQL2 = """

AND statements.sentence_id in (

SELECT sentence_id

FROM statements

INNER JOIN words ON statements.word_id = words.hashid

WHERE statements.class in ('subj','topic') -- start with subset of statements covering question subj/topic

AND words.word IN (%s)

)

GROUP BY statements.sentence_id, sentences.sentence

ORDER BY score desc

"""

SQL1 = SQL1 % full_grammar_in

SQL2 = SQL2 % subj_in

SQL = SQL1 + SQL2

#if (DEBUG_ANSWER == True): print("SQL: ", SQL, "\n args full_grammer_in: ", full_grammar_in, "\n args subj_in", subj_in)

cursor.execute(SQL, full_grammar + subj_topic)

for i in range(0,listSize):

row = cursor.fetchone()

if row:

results.append([row["sentence_id"], row["score"], row["sentence"]])

if (DEBUG_ANSWER == True): print("DEBUG_ANSWER: ", row["sentence_id"], row["score"], row["sentence"])

else:

break

# increment score for each subject / object match - sentence words are in row[2] col

i = 0

top_score = 0 # top score

for row in results:

word_count_dict = get_words(row[2])

subj_obj_score = sum( [value for key, value in word_count_dict if key in subj_obj] )

results[i][1] = results[i][1] + subj_obj_score

if results[i][1] > top_score: top_score = results[i][1]

i = i + 1

#filter out the top-score results

results = [l for l in results if l[1] == top_score]

return results

def chat_flow(cursor, humanSentence, weight):

trainMe = False # if true, the bot requests some help

checkStore = False # if true, the bot checks if we want to store this as a fact

humanWords = get_words(humanSentence)

weight = 0

#Get the sentence classification based on RF model

classification = sentence_rf_class(humanSentence)

## Statement ##

if classification == 'S':

# Verify - do we want to store it?

checkStore = True

botSentence = "OK, I think that is a Statement."

##store_statement(humanSentence, cursor)

##botSentence = random.choice(STATEMENT_STORED)

## Question

elif classification == 'Q':

answers = get_answer(humanSentence, cursor)

if len(answers) > 0:

answer = ""

weight = int(answers[0][1])

if weight > 1:

for a in answers:

answer = answer + "\n" + a[2]

botSentence = answer

else:

botSentence = NO_ANSWER_DATA

else:

botSentence = NO_ANSWER_DATA

## Chat ##

elif classification == 'C':

# Take the human-words and try to find a matching response based on a weighting-factor

chat_matches = retrieve_matches(humanWords, cursor) #get_matches returns ordered list of matches for words:

if len(chat_matches) == 0:

botSentence = NO_CHAT_DATA

trainMe = True

else:

sentence_id, botSentence, weight = chat_matches[0]

if weight > ACCURACY_THRESHOLD:

# tell the database the sentence has been used and other feedback

feedback_stats(sentence_id, cursor)

train_me(botSentence, humanSentence, cursor)

else:

botSentence = NO_CHAT_DATA

trainMe = True

else:

raise RuntimeError('unhandled sentence classification') from error

return botSentence, weight, trainMe, checkStore

if __name__ == "__main__":

conf = helpers.get_config()

regexpYes = re.compile(r'yes')

DBHOST = conf["MySQL"]["server"]

DBUSER = conf["MySQL"]["dbuser"]

DBNAME = conf["MySQL"]["dbname"]

print("Starting Bot...")

# initialize the connection to the database

print("Connecting to database...")

connection = helpers.db_connection(DBHOST, DBUSER, DBNAME)

cursor = connection.cursor()

connectionID = helpers.db_connectionid(cursor)

print("...connected")

trainMe = False

checkStore = False

botSentence = 'Hello!'

while True:

# Output bot's message

if DEBUG_WEIGHT:

print('Bot> ' + botSentence + ' DEBUG_WEIGHT:' + str(round(weight,5) ) )

else:

print('Bot> ' + botSentence)

if trainMe:

print('Bot> Please can you train me - enter a response for me to learn (Enter to Skip)' )

previousSentence = humanSentence

humanSentence = input('>>> ').strip()

if len(humanSentence) > 0:

train_me(previousSentence, humanSentence, cursor)

print("Bot> Thanks I've noted that" )

else:

print("Bot> OK, moving on..." )

trainMe = False

if checkStore:

print('Bot> Shall I store that as a fact for future reference? ("yes" to store)' )

previousSentence = humanSentence

humanSentence = input('>>> ').strip()

if regexpYes.search(humanSentence.lower()):

#Store previous Sentence

store_statement(previousSentence, cursor)

print(random.choice(STATEMENT_STORED))

else:

print("Bot> OK, moving on..." )

checkStore = False

# Ask for user input; if blank line, exit the loop

humanSentence = input('>>> ').strip()

if humanSentence == '' or humanSentence.strip(punctuation).lower() == 'quit' or humanSentence.strip(punctuation).lower() == 'exit':

break

botSentence, weight, trainMe, checkStore = chat_flow(cursor, humanSentence, weight)

connection.commit()

2. Helper utilities is present in helpers.py. Here is helpers.py:

import configparser

import datetime

import os

import sys

import pymysql # http://pymysql.readthedocs.io/en/latest/

# https://github.com/PyMySQL/PyMySQL

class ConfigFileAccessError(Exception):

pass

def fileexists(CONFIGFILE):

return(os.path.isfile(CONFIGFILE) )

def get_config():

""" Load parameter and configuration values from the CONFIGFILE

A nested dictionary is passed back in following format

{"ConfigClass" : { param1 : value, param2 : value ... }

The config file is in standard Python .ini fmt, EG:

[MySQL]

server: 192.168.56.100

dbuser: simplebot

dbname: simplebot

dbcharset: utf8mb4

The above example can then be ref'd:

config = utils.get_config()

username = config["MySQL"]["dbuser"]

"""

CONFIGFILE = "./config/config.ini"

Config = configparser.ConfigParser()

config = {} # Dictionary of "section" keys. Each value is a sub-dict of key-vals

if fileexists(CONFIGFILE):

Config.read(CONFIGFILE)

for section in Config.sections():

subdict = {}

options = Config.options(section)

for option in options:

key = option

val = Config.get(section,option)

subdict[option] = Config.get(section,option)

config[section] = subdict

else:

raise ConfigFileAccessError(CONFIGFILE)

return config

def query_yes_no(question, default="yes"):

"""Ask a yes/no question via raw_input() and return their answer.

The "answer" return value is True for "yes" or False for "no".

- a Cut-and-Paste piece of code from Stack Overflow

"""

valid = {"yes": True, "y": True, "ye": True,

"no": False, "n": False}

if default is None:

prompt = " [y/n] "

elif default == "yes":

prompt = " [Y/n] "

elif default == "no":

prompt = " [y/N] "

else:

raise ValueError("invalid default answer: '%s'" % default)

while True:

sys.stdout.write(question + prompt)

choice = input().lower()

if default is not None and choice == '':

return valid[default]

elif choice in valid:

return valid[choice]

else:

sys.stdout.write("Please respond with 'yes' or 'no' "

"(or 'y' or 'n').\n")

# Flatten out a list of lists (taken from SO: http://stackoverflow.com/questions/10823877/what-is-the-fastest-way-to-flatten-arbitrarily-nested-lists-in-python

def flatten(container):

for i in container:

if isinstance(i, (list,tuple)):

for j in flatten(i):

yield j

else:

yield i

def db_connection(host, user, dbname, charset = "utf8mb4"):

"""

Connect to a MySQL Database Server

connection = pymysql.connect(host = host

, user = user

, password = password

, db = dbname

, charset = charset

, cursorclass=pymysql.cursors.DictCursor)

"""

connection = pymysql.connect(host = host

, user = user

, db = dbname

, charset = charset

, cursorclass=pymysql.cursors.DictCursor)

return connection

def db_connectionid(cursor):

cursor.execute('SELECT connection_id()', (None))

value = cursor.fetchone()["connection_id()"]

return(value)

def timestamp_string():

timestamp_string = str(datetime.datetime.now().strftime("%Y-%m-%d %H:%M"))

return(timestamp_string)

3. As mentioned above, I am then using the code in extractfeaturesdump.py to read a CSV of sentences that have been classified (classifySentences.csv) and dump out the features using extractfeatures.py into a Dump CSV (featuresDump.csv).

Here is extractfeaturesdump.py

Here is extractfeaturesdump.py

##################################################################

# Use the extractfeatures.py file to dump out features

# Read a CSV of sentences and bulk-dump to featuresDump.csv of features

##################################################################

#Input CSV fmt: 1st field is sentence ID, 2nd field is text to process, 3rd field is class

import csv

import sys

import hashlib

import extractfeatures # extractfeatures.py is bepoke util to extract NLTK POS features from sentences

if len(sys.argv) > 1:

FNAME = sys.argv[1]

else:

FNAME = './data/classifySentences.csv'

print("reading input from ", FNAME)

if len(sys.argv) > 2:

FOUT = sys.argv[2]

else:

FOUT = './dumps/featuresDump.csv'

print("Writing output to ", FOUT)

fin = open(FNAME, 'rt')

fout = open(FOUT, 'wt', newline='')

keys = ["id",

"wordCount",

"stemmedCount",

"stemmedEndNN",

"CD",

"NN",

"NNP",

"NNPS",

"NNS",

"PRP",

"VBG",

"VBZ",

"startTuple0",

"endTuple0",

"endTuple1",

"endTuple2",

"verbBeforeNoun",

"qMark",

"qVerbCombo",

"qTripleScore",

"sTripleScore",

"class"]

reader = csv.reader(fin)

loopCount = 0

next(reader) #Assume we have a header

for line in reader:

sentence = line[0]

c = line[1] #class-label

id = hashlib.md5(str(sentence).encode('utf-8')).hexdigest()[:16] # generate a unique ID

output = ""

header = ""

f = extractfeatures.features_dict(id,sentence, c)

for key in keys:

value = f[key]

header = header + ", " + key

output = output + ", " + str(value)

if loopCount == 0: # only extract and print header for first dict item

header = header[1:] #strip the first ","" off

print(header)

fout.writelines(header + '\n')

output = output[1:] #strip the first ","" off

loopCount = loopCount + 1

print(output)

fout.writelines(output + '\n')

fin.close()

fout.close()

4. To extract features from sentences using NLTK use the file extractfeatures.py. Here is extractfeatures.py:##############################################

# pass in a sentence, pass out it's features #

##############################################

import nltk

from nltk import word_tokenize

lemma = nltk.wordnet.WordNetLemmatizer()

sno = nltk.stem.SnowballStemmer('english')

from nltk.corpus import stopwords

import pandas as pd # Use Pandas to create pandas Series in features_series()

import sys

import hashlib

import re

import string

import itertools

line = ["xxx","Oracle 12.2 will be released for on-premises users on 15 March 2017",0,"S"]

pos = [] #list of PartsOfSpeech

output = "" #comma separated string

header = "" #string for describing features header

VerbCombos = ['VB',

'VBD',

'VBG',

'VBN',

'VBP',

'VBZ',

'WDT',

'WP',

'WP$',

'WRB',

'MD']

questionTriples = ['CD-VB-VBN',

'MD-PRP-VB' ,

'MD-VB-CD' ,

'NN-IN-DT' ,

'PRP-VB-PRP' ,

'PRP-WP-NNP' ,

'VB-CD-VB' ,

'VB-PRP-WP' ,

'VBZ-DT-NN' ,

'WP-VBZ-DT' ,

'WP-VBZ-NNP' ,

'WRB-MD-VB']

statementTriples = ['DT-JJ-NN',

'DT-NN-VBZ',

'DT-NNP-NNP',

'IN-DT-NN',

'IN-NN-NNS',

'MD-VB-VBN',

'NNP-IN-NNP',

'NNP-NNP-NNP',

'NNP-VBZ-DT',

'NNP-VBZ-NNP',

'NNS-IN-DT',

'VB-VBN-IN',

'VBZ-DT-JJ']

startTuples = ['NNS-DT',

'WP-VBZ',

'WRB-MD']

endTuples = ['IN-NN',

'VB-VBN',

'VBZ-NNP']

# Because python dict's return key-vals in random order, provide ordered list to pass to ML models

feature_keys = ["id",

"wordCount",

"stemmedCount",

"stemmedEndNN",

"CD",

"NN",

"NNP",

"NNPS",

"NNS",

"PRP",

"VBG",

"VBZ",

"startTuple0",

"endTuple0",

"endTuple1",

"endTuple2",

"verbBeforeNoun",

"qMark",

"qVerbCombo",

"qTripleScore",

"sTripleScore",

"class"]

def strip_sentence(sentence):

sentence = sentence.strip(",")

sentence = ''.join(filter(lambda x: x in string.printable, sentence)) #strip out non-alpha-numerix

sentence = sentence.translate(str.maketrans('','',string.punctuation)) #strip punctuation

return(sentence)

# Pass in a list of strings (i.e. PoS types) and the sentence to check PoS types for

# check if *Any Pair Combo* of the PoS types list exists in the sentence PoS types

# return a count of occurrence

def exists_pair_combos(comboCheckList, sentence):

pos = get_pos(sentence)

tag_string = "-".join([ i[1] for i in pos ])

combo_list = []

for pair in itertools.permutations(comboCheckList,2):

if(pair[0] == "MD"): # * Kludge - strip off leading MD *

pair = ["",""]

combo_list.append("-".join(pair))

if any(code in tag_string for code in combo_list):

return 1

else:

return 0

# Parts Of Speech

def get_pos(sentence):

sentenceParsed = word_tokenize(sentence)

return(nltk.pos_tag(sentenceParsed))

# Count Q-Marks

def count_qmark(sentence):

return(sentence.count("?") )

# Count a specific POS-Type

#VBG = count_POSType(pos,'VBG')

def count_POSType(pos, ptype):

count = 0

tags = [ i[1] for i in pos ]

return(tags.count(ptype))

#if ptype in tags:

# VBG = 1

#return(VBG)

# Does Verb occur before first Noun

def exists_vb_before_nn(pos):

pos_tags = [ i[1] for i in pos ]

#Strip the Verbs to all just "V"

pos_tags = [ re.sub(r'V.*','V', str) for str in pos_tags ]

#Strip the Nouns to all just "NN"

pos_tags = [ re.sub(r'NN.*','NN', str) for str in pos_tags ]

vi =99

ni =99

mi =99

#Get first NN index

if "NN" in pos_tags:

ni = pos_tags.index("NN")

#Get first V index

if "V" in pos_tags:

vi = pos_tags.index("V")

#get Modal Index

if "MD" in pos_tags:

mi = pos_tags.index("MD")

if vi < ni or mi < ni :

return(1)

else:

return(0)

# Stemmed sentence ends in "NN-NN"?

def exists_stemmed_end_NN(stemmed):

stemmedEndNN = 0

stemmed_end = get_first_last_tuples(" ".join(stemmed))[1]

if stemmed_end == "NN-NN":

stemmedEndNN = 1

return(stemmedEndNN)

# Go through the predefined list of start-tuples, 1 / 0 if given startTuple occurs in the list

def exists_startTuple(startTuple):

exists_startTuples = []

for tstring in startTuples: #startTuples defined as global var

if startTuple in tstring:

exists_startTuples.append(1)

else:

exists_startTuples.append(0)

return(exists_startTuples)

# Go through the predefined list of end-tuples, 1 / 0 if given Tuple occurs in the list

def exists_endTuple(endTuple):

exists_endTuples = []

for tstring in endTuples: #endTuples defined as global var

if endTuple in tstring:

exists_endTuples.append(1)

else:

exists_endTuples.append(0)

return(exists_endTuples)

#loop round list of triples and construct a list of binary 1/0 vals if triples occur in list

def exists_triples(triples, tripleSet):

exists = []

for tstring in tripleSet:

if tstring in triples:

exists.append(1)

else:

exists.append(0)

return(exists)

# Get a sentence and spit out the POS triples

def get_triples(pos):

list_of_triple_strings = []

pos = [ i[1] for i in pos ] # extract the 2nd element of the POS tuples in list

n = len(pos)

if n > 2: # need to have three items

for i in range(0,n-2):

t = "-".join(pos[i:i+3]) # pull out 3 list item from counter, convert to string

list_of_triple_strings.append(t)

return list_of_triple_strings

def get_first_last_tuples(sentence):

first_last_tuples = []

sentenceParsed = word_tokenize(sentence)

pos = nltk.pos_tag(sentenceParsed) #Parts Of Speech

pos = [ i[1] for i in pos ] # extract the 2nd element of the POS tuples in list

n = len(pos)

first = ""

last = ""

if n > 1: # need to have three items

first = "-".join(pos[0:2]) # pull out first 2 list items

last = "-".join(pos[-2:]) # pull out last 2 list items

first_last_tuples = [first, last]

return first_last_tuples

def lemmatize(sentence):

"""

pass in a sentence as a string, return just core text that has been "lematised"

stop words are removed - could effect ability to detect if this is a question or answer

- depends on import lemma = nltk.wordnet.WordNetLemmatizer() and from nltk.corpus import stopwords

"""

stop_words = set(stopwords.words('english'))

word_tokens = word_tokenize(sentence)

filtered_sentence = []

for w in word_tokens:

if w not in stop_words:

filtered_sentence.append(w.lower()) # also set lowercase

lem = []

for w in filtered_sentence:

lem.append(lemma.lemmatize(w))

return lem

def stematize(sentence):

"""

pass in a sentence as a string, return just core text stemmed

stop words are removed - could effect ability to detect if this is a question or answer

- depends on import sno = nltk.stem.SnowballStemmer('english') and from nltk.corpus import stopwords

"""

stop_words = set(stopwords.words('english'))

word_tokens = word_tokenize(sentence)

filtered_sentence = []

for w in word_tokens:

if w not in stop_words:

filtered_sentence.append(w)

stemmed = []

for w in filtered_sentence:

stemmed.append(sno.stem(w))

return stemmed

#########################################################################

# A wrapper function to put it all together - build a csv line to return

# A header string is also returned for optional use

def get_string(id,sentence,c="X"):

header,output = "",""

pos = get_pos(sentence)

qMark = count_qmark(sentence) #count Qmarks before stripping punctuation

sentence = strip_sentence(sentence)

#lemmed = lemmatize(sentence)

stemmed = stematize(sentence)

wordCount = len(sentence.split())

stemmedCount = len(stemmed)

qVerbCombo = exists_pair_combos(VerbCombos,sentence)

verbBeforeNoun = exists_vb_before_nn(pos)

output = id + "," + str(wordCount) + "," + str(stemmedCount) + "," + str(qVerbCombo)+ "," + str(qMark) + "," + str(verbBeforeNoun)

header = header + "id,wordCount,stemmedCount,qVerbCombo,qMark,verbBeforeNoun"

# list of POS-TYPES to count , generate a list of counts in the CSV line

for ptype in ["VBG", "VBZ", "NNP", "NN", "NNS", "NNPS","PRP", "CD" ]:

output = output + "," + str( count_POSType(pos,ptype) )

header = header + "," + ptype

output = output + "," + str(exists_stemmed_end_NN(stemmed))

header = header + ",StemmedEndNN,"

## get Start Tuples and End Tuples Features ##

startTuple,endTuple = get_first_last_tuples(sentence)

l = exists_startTuple(startTuple) #list [1/0] for exists / not exists

output = output + "," + ",".join(str(i) for i in l)

for i in range(0,len(l)):

header = header + "startTuple" + str(i+1) + ","

l = exists_endTuple(endTuple) #list [1/0] for exists / not exists

output = output + "," + ",".join(str(i) for i in l)

for i in range(0,len(l)):

header = header + "endTuple" + str(i+1) + ","

## look for special Triple Combinations ##

triples = get_triples(pos) # all the triple sequences in the sentence POS list

l = exists_triples(triples, questionTriples)

total = sum(l)

output = output + "," + str(total)

header = header + "qTripleScore" + ","

l = exists_triples(triples, statementTriples)

total = sum(l)

output = output + "," + str(total)

header = header + "sTripleScore" + ","

output = output + "," + c #Class Type on end

header = header + "class"

return output,header

# End of Get String wrapper

#########################################################################

# Build a dictionary of features

def features_dict(id,sentence,c="X"):

features = {}

pos = get_pos(sentence)

features["id"] = id

features["qMark"] = count_qmark(sentence) #count Qmarks before stripping punctuation

sentence = strip_sentence(sentence)

stemmed = stematize(sentence)

startTuple,endTuple = get_first_last_tuples(sentence)

features["wordCount"] = len(sentence.split())

features["stemmedCount"] = len(stemmed)

features["qVerbCombo"] = exists_pair_combos(VerbCombos,sentence)

features["verbBeforeNoun"] = exists_vb_before_nn(pos)

for ptype in ["VBG", "VBZ", "NNP", "NN", "NNS", "NNPS","PRP", "CD" ]:

features[ptype] = count_POSType(pos,ptype)

features["stemmedEndNN"] = exists_stemmed_end_NN(stemmed)

l = exists_startTuple(startTuple) #list [1/0] for exists / not exists

for i in range(0,len(l)):

features["startTuple" + str(i)] = l[i]

l = exists_endTuple(endTuple) #list [1/0] for exists / not exists

for i in range(0,len(l)):

features["endTuple" + str(i)] = l[i]

## look for special Triple Combinations ##

triples = get_triples(pos) # all the triple sequences in the sentence POS list

l = exists_triples(triples, questionTriples) # a list of 1/0 for hits on this triple-set

features["qTripleScore"] = sum(l) # add all the triple matches up to get a score

l = exists_triples(triples, statementTriples) # Do same check for the Statement t-set

features["sTripleScore"] = sum(l) # add all the triple matches up to get a score

features["class"] = c #Class Type on end

return features

# pass in dict, get back series

def features_series(features_dict):

values=[]

for key in feature_keys:

values.append(features_dict[key])

features_series = pd.Series(values)

return features_series

## MAIN ##

if __name__ == '__main__':

# ID, WordCount, StemmedCount, Qmark, VBG, StemmedEnd, StartTuples, EndTuples, QuestionTriples, StatementTriples, Class

# [1/0] [NN-NN?] [3 x binary] [3 x binary] [10 x binary] [10 x binary]

print("Starting...")

c = "X" # Dummy class

header = ""

output = ""

if len(sys.argv) > 1:

sentence = sys.argv[1]

else:

sentence = line[1]

id = hashlib.md5(str(sentence).encode('utf-8')).hexdigest()[:16]

features = features_dict(id,sentence, c)

pos = get_pos(sentence) #NLTK Parts Of Speech, duplicated just for the printout

print(pos)

print(features)

for key,value in features.items():

print(key, value)

#header string

for key, value in features.items():

header = header + ", " + key #keys come out in a random order

output = output + ", " + str(value)

header = header[1:] #strip the first ","" off

output = output[1:] #strip the first ","" off

print("HEADER:", header)

print("VALUES:", output)

5. Chatbot server is a multi-threaded server and it allows multiple clients to connect to it. Here is the code for server.py:

import socket

import threading

import os

from string import punctuation

import random

import re

import logging

import chatlogic

import helpers

LOGFILE = './log/server.log'

config = helpers.get_config()

toBool = lambda str: True if str == "True" else False

DEBUG_SERVER = toBool(config["DEBUG"]["server"])

LOGGING_FMT = '%(asctime)s %(threadName)s %(message)s'

regexpYes = re.compile(r'yes')

if DEBUG_SERVER:

logging.basicConfig(filename=LOGFILE, level=logging.DEBUG, format=LOGGING_FMT)

else:

logging.basicConfig(filename=LOGFILE, level=logging.INFO, format=LOGGING_FMT)

def session(connection):

# Get Config

conf = helpers.get_config()

DBHOST = conf["MySQL"]["server"]

DBUSER = conf["MySQL"]["dbuser"]

DBNAME = conf["MySQL"]["dbname"]

logging.info("Starting Bot session-thread...")

# Initialize the database connection

logging.info(" session-thread connecting to database...")

dbconnection = helpers.db_connection(DBHOST, DBUSER, DBNAME)

dbcursor = dbconnection.cursor()

dbconnectionid = helpers.db_connectionid(dbcursor)

logging.info(" ...connected")

botSentence = 'Hello!'

weight = 0

trainMe = False

checkStore = False

def receive(connection):

logging.debug(" receive(connection): PID {}, thread {} \n".format(pid, thread))

received = connection.recv(1024)

if not received:

return False

else:

return received

while True:

pid = os.getpid()

thread = threading.current_thread()

# pass received message to chatbot

received = receive(connection)

humanSentence = received.decode().strip()

if humanSentence == '' or humanSentence.strip(punctuation).lower() == 'quit' or humanSentence.strip(punctuation).lower() == 'exit':

break

# Chatbot processing

botSentence, weight, trainMe, checkStore = chatlogic.chat_flow(dbcursor, humanSentence, weight)

logging.debug(" Received botSentence {} from chatbot.chat_flow".format(botSentence))

if trainMe:

logging.debug(" trainMe is True")

send = "Please train me by entering some information for me to learn, or reply \"skip\" to skip' ".encode()

connection.send(send)

previousSentence = humanSentence

received = receive(connection)

humanSentence = received.decode().strip()

logging.debug(" trainMe received {}".format(humanSentence))

if humanSentence != "skip":

chatlogic.train_me(previousSentence, humanSentence, dbcursor)

botSentence = "Thanks I have noted that"

else:

botSentence = "OK, moving on..."

trainMe = False

if checkStore:

logging.debug("CheckStore is True")

send = 'Shall I store this information as a fact for future reference? (Reply "yes" to store)'.encode()

connection.send(send)

previousSentence = humanSentence

received = receive(connection)

humanSentence = received.decode().strip()

logging.debug(" checkStore received {}".format(humanSentence))

if regexpYes.search(humanSentence.lower()):

#Store previous Sentence

logging.debug(" Storing...")

chatlogic.store_statement(previousSentence, dbcursor)

logging.debug(" Statement Stored.")

botSentence = random.choice(chatlogic.STATEMENT_STORED)

else:

botSentence = "OK, moving on..."

checkStore = False

dbconnection.commit()

logging.debug(" sending botSentence back: {}".format(botSentence))

send = botSentence.encode()

connection.send(send)

logging.info(" Closing Session")

if __name__ == "__main__":

logging.info("-----------------------------")

logging.info("-- Starting the BotServer --")

print("Starting the Server...")

print("Logging to: ", LOGFILE)

LISTEN_HOST = config["Server"]["listen_host"]

LISTEN_PORT = int(config["Server"]["tcp_socket"])

LISTEN_QUEUE = int(config["Server"]["listen_queue"])

# Set up the listening socket

sckt = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

sckt.bind((LISTEN_HOST, LISTEN_PORT))

sckt.listen(LISTEN_QUEUE)

print("...Socket has been set up")

logging.info("Server Listener set up on port " + str(LISTEN_PORT))

# Accept connections in a loop

while True:

logging.info("Main Server waiting for a connection")

(connection, address) = sckt.accept()

logging.info("Connect Received " + str(connection) + " " + str(address))

t = threading.Thread(target = session, args=[connection])

t.setDaemon(True) #set to Daemon status, allows CTRL-C to kill all threads

t.start()

logging.info("Closing Server listen socket on " + str(LISTEN_PORT))

sckt.close()

6. Code for a simple client that connects to the bot server is present in client.py. Here is client.py:

import socket

import select

import argparse

# arg-parse

parser = argparse.ArgumentParser(description='Interactive Chat Client using TCP Sockets')

parser.add_argument('-a', '--addr', dest = 'host', default = 'vhost1', help='remote host-name or IP address', required=True)

parser.add_argument('-p', '--port', dest = 'port', type = int, default = 3333, help='TCP port', required=True)

args = parser.parse_args()

ADDR = args.host

PORT = args.port

sckt = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

server_address = (ADDR, PORT)

print("Connecting to the server", ADDR, "at port", PORT)

print('Enter "quit" to Quit.')

sckt.connect((ADDR, PORT))

while True:

#Check that our connection is still alive before trying to do anything

try:

ready_to_read, ready_to_write, in_error = select.select([sckt,], [sckt,], [], 5)

except select.error:

sckt.shutdown(2) # 0 = done receiving, 1 = done sending, 2 = both

sckt.close()

print('connection error')

break

l = 0

while l < 1 : #put this loop in to check message has text ... catches strange CR issue on windows

message = input('>>> ').strip()

l = len(message)

if (message == "exit" or message == "quit"):

sckt.send(message.encode())

sckt.send("".encode())

break

sckt.send(message.encode())

print(sckt.recv(1024).decode())

print("Connection closed")

Step 4: Create file: setup.py

setup.py is used to to build and install the application. Add basic information about the application in setup.py. Once you have this file created, you can build and install the application using the commands:

python setup.py build python setup.py installHere is setup.py:

from setuptools import setup

setup(name='SmartBot',

version='1.0.0',

description='A Smart NLP Chatbot'

)

Run Application:

We need to run the server and client for the SmartBot to be fully functional. Here are the steps to run it in Anaconda Prompt.

1. To run the server application, in Anaconda Prompt, navigate to your project location and execute the command:

python server.py2. To run the client application, in Anaconda Prompt, navigate to your project location and execute the command:

python client.py -a localhost -p 3333

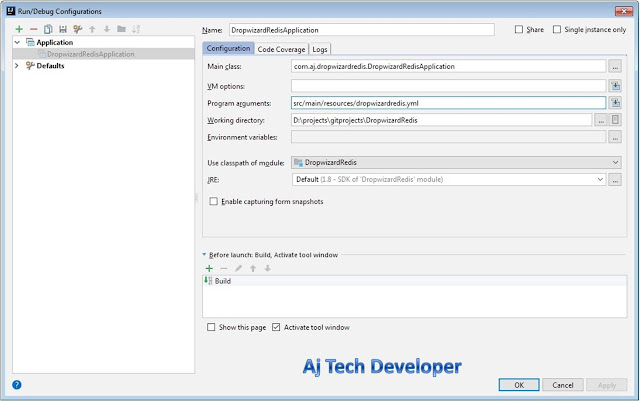

Here are the steps to run it in Intellij IDE.

1. To run the server application in Intellij IDE, right click the file server.py and click Run 'server'

2. To run client application in your IDE use:

Script parameters: -a localhost -p 3333

Sample Chat:

(base) D:\projects\gitprojects\SmartBot>python client.py -a localhost -p 3333 Connecting to the server localhost at port 3333 Enter "quit" to Quit. >>> There is a technical blog named as Software Developer Central. Shall I store this information as a fact for future reference? (Reply "yes" to store) >>> yes Thanks for telling me that. >>> Aj Tech Developer is the author of the blog Software Developer Central. Shall I store this information as a fact for future reference? (Reply "yes" to store) >>> yes Thanks for telling me that. >>> It has posts on topics such as Machine Learning, Internet of Things, Angular 5, Dropwizard, Akka HTTP, Play Framework and other trending and popular technol ogies. Shall I store this information as a fact for future reference? (Reply "yes" to store) >>> yes Thanks for telling me that. >>> Hello Please train me by entering some information for me to learn, or reply "skip" to skip' >>> Hello!!! Thanks I have noted that >>> Hello Hello!!! >>> What is the name of the blog? There is a technical blog named as Software Developer Central. >>> What posts does it have? It has posts on topics such as Machine Learning, Internet of Things, Angular 5, Dropwizard, Akka HTTP, Play Framework and other trending and popular technologie s. >>> Who is the author? Aj Tech Developer is the author of the blog Software Developer Central. >>> quit Connection closedFrom the conversation above you can see how the SmartBot is working in its 3 modes: Chat Mode, Question Mode and Statement Mode.

Conclusion:

In this post I have explained in simple steps as to how you can build your own NLP and Machine Learning chatbot. The code used in this post is available on GitHub.

Learn the most popular and trending technologies like Machine Learning, Angular 5, Internet of Things (IoT), Akka HTTP, Play Framework, Dropwizard, Docker, Netflix Eureka, Netflix Zuul, Spring Cloud, Spring Boot and Flask in simple steps by reading my most popular blog posts at Software Developer Central.

If you like my post, please feel free to share it using the share button just below this paragraph or next to the heading of the post. You can also tweet with #SoftwareDeveloperCentral on Twitter. To get a notification on my latest posts or to keep the conversation going, you can follow me on Twitter. Please leave a note below if you have any questions or comments. Learn the most popular and trending technologies like Machine Learning, Angular 5, Internet of Things (IoT), Akka HTTP, Play Framework, Dropwizard, Docker, Netflix Eureka, Netflix Zuul, Spring Cloud, Spring Boot and Flask in simple steps by reading my most popular blog posts at Software Developer Central.

Hi, i have this problem when running the client.py

ReplyDeleteFile "client.py", line 44, in

sckt.send(message.encode())

ConnectionAbortedError: [WinError 10053] An established connection was aborted by the software in your host machine

may I know what happen?Thank you

Hi @JIA JIE PANG

DeleteWhat version of Python are you using? I am using version 3.6.3. Usually older version of Python gives this error.

hi. I'm using version 3.6.4. But it still come out with error.

DeleteTraceback (most recent call last):

File "client.py", line 42, in

print(sckt.recv(1024).decode())

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xff in position 25: invalid start byte

I like your chatbot very much. Just have a question ... You trained getting data from featuredump.csv file . My concern is where are you getting this file and how is the calculation

ReplyDeleteHi @mcc.jeppiaar mcc_jeppiaar

DeleteI had missed adding a few files to the project structure. Thanks for pointing that out. I have now added those files.

I have created a CSV file with sentences that have been classified into S - Sentence, Q - Question, C - Clause

This CSV is named as classifySentences.csv and can be found in the data folder in the project structure.

I am then using the code in extractfeaturesdump.py to read a CSV of sentences that have been classified (classifySentences.csv) and dump out the features using extractfeatures.py into a Dump CSV (featuresDump.csv). I have added these details in my blog post under the heading: Creation of Training Data.